
AI Isn’t the Strategy — It’s the Tool: Our Principles for Responsible AI Use

Artificial intelligence is everywhere. From content generation to customer research, marketing automation to product design — AI is reshaping how digital teams operate. But with all its power, AI still isn’t a strategy. It’s a tool. And like any powerful tool, how you use it matters.

At Daylight, we believe responsible AI use doesn’t just protect our clients — it leads to better, more impactful work. As a digital product and strategy agency, we’re not in the business of chasing hype. We’re in the business of solving problems, building great brands, and helping our clients drive real results. That’s why we apply AI carefully, creatively, and always with a human-first mindset.

In this post, we’ll share the principles that guide our AI use — from how we approach automation to the values that shape our decision-making. Whether you’re exploring AI yourself or vetting an agency partner, this is how we think about the work ahead.

AI Is the Tool. Vision Is the Driver.

There’s a growing temptation in today’s market to treat AI as the plan — to bet big on automation without asking the deeper question: What are we trying to accomplish?

In our view, AI is only valuable when it aligns with the business goals, creative vision, and human outcomes we’re trying to achieve. That means:

- AI doesn’t replace direction. It supports it.

- AI isn’t a shortcut. It’s a catalyst.

- AI shouldn’t create noise. It should reduce it — clearing the way for better decisions and more focused human effort.

Responsible AI use starts with refusing to adopt it just because it's available. It means choosing when, how, and why to use AI, and always staying grounded in the broader plan.

Our Principles for Responsible AI Use

We’ve shaped our internal responsible AI guidelines around both industry best practices and Daylight’s core values. These principles help us make informed decisions about when to use AI — and when not to.

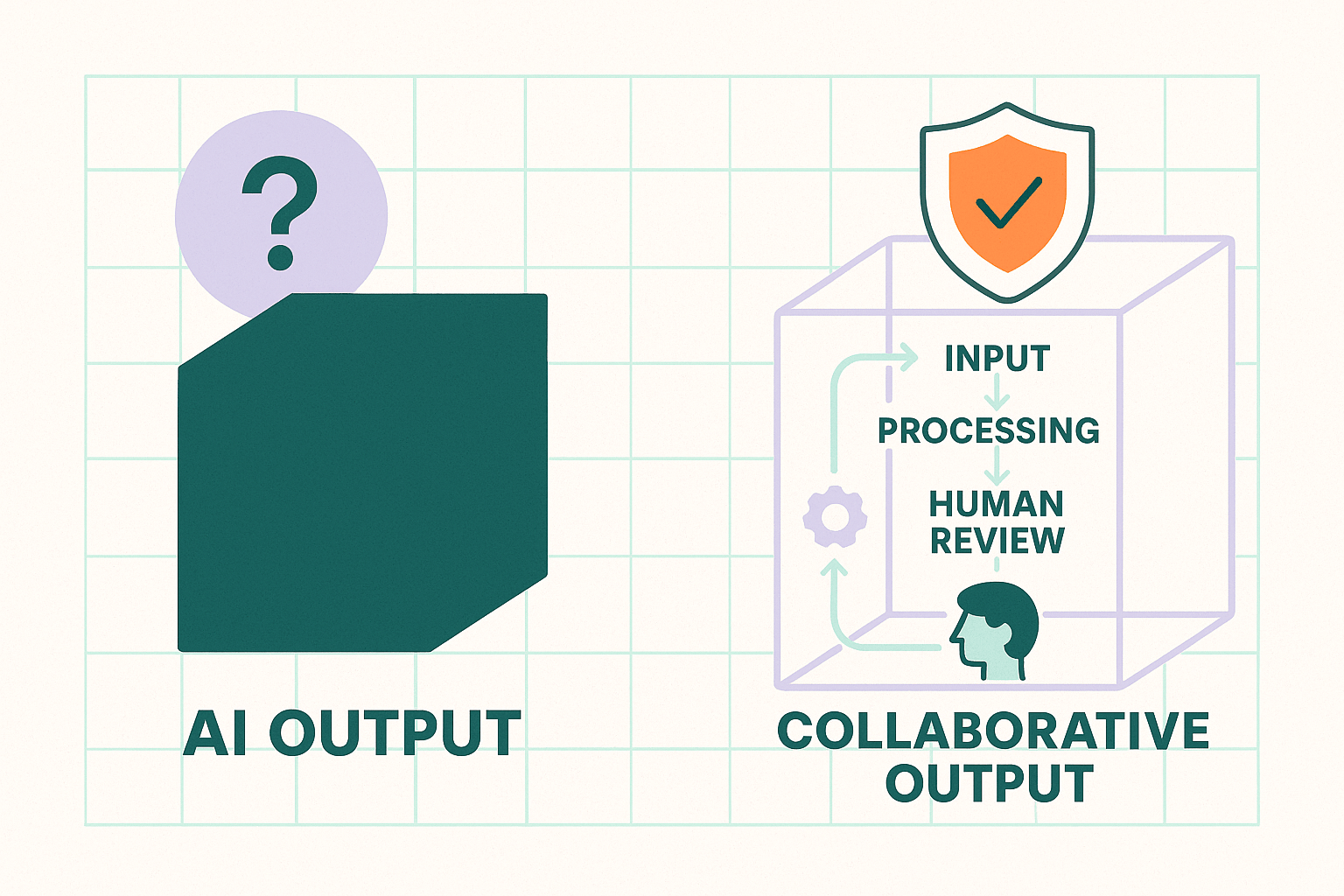

1. Transparency Over Mystery

We believe in being open about when and how AI is used — especially in ways that impact our clients’ work, data, or deliverables. That means no “black box” solutions, no secret automation, and no pretending the work is fully human when it’s not.

Being transparent builds trust — and that trust is foundational to any long-term partnership.

2. Human-Centered Judgment

AI can surface insights, automate workflows, and speed up content creation — but it can’t replace human nuance. We ensure every AI-assisted decision is reviewed, refined, and ultimately owned by a human team member. AI gives us the speed and scale; people bring the context, empathy, and integrity.

This human-in-the-loop approach is central to all of our workflows.

3. Bias Awareness & Mitigation

AI systems are only as fair as the data they’re trained on. We acknowledge that bias exists in many tools — especially large language models — and we take steps to recognize and correct it.

Whether we’re using AI to generate content, segment customers, or synthesize research, we look for blind spots, question default assumptions, and adjust outputs to reflect equity, accuracy, and inclusion.

4. Data Privacy and Stewardship

AI’s power often comes from data — but with that comes responsibility. We don’t feed client data into public models without strict review. We avoid storing sensitive materials in AI tools without proper safeguards. And we respect user privacy in how we build, analyze, and optimize digital products.

Our internal policies follow responsible AI governance practices, and we prioritize safe, secure tools that align with client requirements.

5. Purpose-Driven Application

We ask a simple question before every AI implementation: Does this help us make the work better?

Sometimes the answer is yes — AI can speed up an audit, generate ideas, or test variants at scale. But sometimes the answer is no — and we scrap the tool in favor of human exploration, conversation, or creativity.

Responsible AI use means knowing when not to use it. Efficiency should never come at the expense of quality, creativity, or integrity.

How This Looks in Practice

Here are a few examples of how these principles show up in our work:

- Content Creation: We use AI to generate first-draft outlines, tone explorations, or copy variants, but every piece of client-facing writing is reviewed, edited, and shaped by our team to meet voice, tone, and messaging standards.

- Market Research: AI helps us analyze trends, synthesize audience responses, and organize competitor insights faster. But we never rely solely on machine interpretation; our strategists always bring human context and critical thinking.

- Workflow Optimization: We use AI tools to automate parts of our internal QA and formatting processes, freeing up time for deeper work and improving overall consistency.

In each case, the principle is the same: AI supports the team rather than replacing it.

The Risks of Irresponsible AI Use

With every powerful tool comes risk. Here’s what can happen when AI is used irresponsibly — and why we’re so intentional about the way we apply it:

- Loss of trust. If clients or users discover that content was AI-generated and this was never disclosed, trust can erode quickly.

- Bias amplification. AI can reinforce harmful stereotypes or exclude key audiences if left unchecked.

- Security breaches. Feeding sensitive client data into public models can expose that data and create legal, ethical, or compliance problems.

- Lack of alignment. AI overuse can lead to content or features that look promising but drift from real business goals.

Responsible use is how we avoid these risks. It’s not just good ethics — it’s good business.

Why It Matters to Us (And to You)

At Daylight, our values aren’t add-ons — they’re embedded in how we work. Here’s how our responsible AI principles map directly to what we believe:

| Daylight Value | AI Principle It Supports |
| --- | --- |
| Integrity | Transparency, human review, accountable implementation |
| Innovation | Smart tool adoption, creative problem solving |
| Doing Good | Bias mitigation, inclusive outcomes |
| Open Communication | Clear disclosure, client alignment |
| Driving KPIs | Application tied to measurable outcomes |

We know our clients care about moving fast, working smarter, and staying competitive. We also know they care about doing things the right way. That’s why responsible AI isn’t just a stance for us — it’s a differentiator.

Looking Ahead: Our Commitment

We don’t claim to have all the answers — the AI landscape is evolving fast, and best practices will continue to shift. But our commitment remains:

- To lead with curiosity, not fear.

- To experiment with purpose.

- To stay accountable to our clients, our users, and our team.

- To use AI as a tool — not a crutch, not a gimmick, and not a replacement for clear direction.

If you’re navigating your own questions around AI — where to start, what to avoid, and how to move forward responsibly — we’re here to help.

Schedule your AI strategy meeting today.

Let’s talk about how Daylight can help you integrate AI into your workflows — with intention, clarity, and strategic focus.